This blog post is part of our data centre networking series:

- Data centre networking : What is SDN

- Data centre networking : SDN fundamentals

- Data centre networking : SDDC

- Data centre networking : What is OVS

- Data centre networking : What is OVN

- Data centre networking : SmartNICs

With the development of open source software-defined networking solutions, virtualisation has taken an increasingly important place in modern data centres. Concepts like virtual switching and routing became part of the data centre networking scene, with OVS as a pioneering example. Initially, however, virtual switches lacked important networking features and standards that hardware-based network devices already had, and that were proven and widely implemented. OVN emerged to bring those features to virtual switching environments and to extend them across multi-host clusters. Let's first take a closer look at what OVN is.

What is OVN?

OVN, the Open Virtual Network, is an open source project that was originally developed by the Open vSwitch (OVS) team at Nicira.

It complements the existing capabilities of OVS and provides virtual network abstractions, such as virtual L2 and L3 overlays, security groups, and DHCP services. Just like OVS, OVN was designed to support highly scalable and production-grade implementations. It provides an open approach to virtual networking capabilities for any type of workload on a virtualised platform (virtual machines and containers) using the same API.

OVN gives administrators control over network resources by connecting groups of VMs or containers into private L2 and L3 networks quickly and programmatically, without the need to provision VLANs or other physical network resources.

The OVN project was announced for the first time in January 2015, and had its first experimental release in February 2016. The first supported release was announced in September 2016.

Motivations and OVN features

Open vSwitch (OVS), the most popular virtual switch in OpenStack, was developed to provide low-level networking components for server hypervisors. To make OVS more effective across various network environments, OVN was built to augment its low-level switching capabilities with a lightweight control plane that provides native support for common virtual networking abstractions.

OVN offers a straightforward, production-grade design that scales OVS capabilities to thousands of hypervisors. It shows improved performance and stability over existing OVS plugins, without requiring any additional agents for deployment and debugging. OVN is compatible with DPDK-based and hardware-accelerated gateways, and is already supported on switches from Brocade, Cumulus, Dell, HP, Lenovo, Arista, and Juniper.

OVN provides many virtual network features, ranging from layer 2 switching to higher-level network services such as DHCP and DNS. Here is a high-level view of its key capabilities:

- VLAN tenant networks are supported by the networking-ovn driver when used with OVN (version 2.11 or higher).

- The trunk service plugin is supported: the trunk driver uses OVN's parent port and port tagging functionality. The ‘trunk’ service plugin needs to be enabled in the Neutron configuration files.

- A native implementation of layer 2 switching, which replaces the conventional Neutron Open vSwitch (OVS) agent.

- A native implementation of layer 3 routing with support for distributed routing, which replaces the conventional Neutron L3 agent. L3 gateways from logical to physical networks are also supported.

- Security groups with support for L2/L3/L4 Access Control Lists (ACLs).

- A native distributed implementation of DHCP, which replaces the conventional Neutron DHCP agent. However, this native DHCP implementation does not yet support DNS or metadata features.

- Multiple tunneling protocols and overlays are supported, such as Geneve and STT.

- OVS can be used with OVN and networking-ovn, either using the Linux kernel datapath or the DPDK datapath.

- Since OVN version 2.8, a built-in DNS implementation is supported.

- For integration with OpenStack, many Neutron API extensions are supported with OVN.

- Support for software-based as well as top-of-rack (ToR) layer 2 gateways that implement the hardware_vtep schema.

- OVN can provide networking both for virtual machines and for containers running inside them, without an additional overlay networking layer.

- Integration with OpenStack and other cloud management systems (CMS).

- Hardware offload capabilities: OVN relies on OVS for packet processing, and the OVS datapath can be offloaded to hardware (e.g. Mellanox ConnectX-5 and later cards) or accelerated with DPDK on Intel NICs.
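Security groups are expressed in OVN as ACLs, each carrying a priority, a direction, a match and an action. As a rough sketch of how such L2/L3/L4 rules are evaluated, the Python below picks the highest-priority matching rule for a packet. It is a toy model, not OVN's API: Python predicates stand in for OVN's match language, the priorities and the 10.0.0.0/24 management subnet are hypothetical, and falling back to "drop" when nothing matches is an assumption chosen to mimic a deny-by-default security group.

```python
import ipaddress
from dataclasses import dataclass
from typing import Callable, Dict, List

Packet = Dict[str, object]


@dataclass
class Acl:
    priority: int                    # higher value wins, as with OVN ACL priorities
    direction: str                   # "from-lport" or "to-lport"
    match: Callable[[Packet], bool]  # stands in for an OVN match expression
    action: str                      # only "allow" or "drop" in this sketch


def evaluate(acls: List[Acl], direction: str, pkt: Packet,
             default_action: str = "drop") -> str:
    """Return the action of the highest-priority ACL matching pkt.

    Falling back to default_action when nothing matches is an assumption
    of this sketch (deny-by-default, like a security group).
    """
    hits = [a for a in acls if a.direction == direction and a.match(pkt)]
    if not hits:
        return default_action
    return max(hits, key=lambda a: a.priority).action


def ssh_from_mgmt(pkt: Packet) -> bool:
    """L3 + L4 match: TCP port 22 from the hypothetical 10.0.0.0/24 subnet."""
    src = pkt.get("ip4.src")
    return (isinstance(src, str)
            and ipaddress.ip_address(src) in ipaddress.ip_network("10.0.0.0/24")
            and pkt.get("tcp.dst") == 22)


# Hypothetical security group: allow inbound SSH from the management subnet,
# drop every other packet delivered to the port.
acls = [
    Acl(1002, "to-lport", ssh_from_mgmt, "allow"),
    Acl(1001, "to-lport", lambda pkt: True, "drop"),
]

print(evaluate(acls, "to-lport", {"ip4.src": "10.0.0.5", "tcp.dst": 22}))   # allow
print(evaluate(acls, "to-lport", {"ip4.src": "192.0.2.9", "tcp.dst": 22}))  # drop
```

Because the catch-all drop rule has a lower priority than the SSH rule, only traffic that the higher-priority rule explicitly matches is allowed through.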

OVN architecture and fundamentals

The main components of OVN fall into two categories: databases and daemons.

The OVN databases:

- The OVN northbound database (ovsdb) is the integration point with OpenStack or another CMS. It holds an intermediate, high-level representation of the desired configuration as defined in the CMS, and keeps track of QoS, NAT, and ACL settings and their parent objects (logical ports, logical switches, logical routers).

- The OVN southbound database (ovsdb) holds a more hypervisor-specific representation of the network and keeps the run-time state (e.g. the location of logical ports, the location of physical endpoints, and the logical pipeline generated from the configured and run-time state).

The OVN daemons:

- ovn-northd translates the high-level configuration in the northbound DB into the run-time southbound DB, generating logical flows from that configuration.

- ovn-controller is a local SDN controller that runs on every host and manages the local OVS instance. It registers its chassis and VIFs in the southbound DB and converts logical flows into physical flows (e.g. mapping VIF UUIDs to OpenFlow ports). It pushes physical configuration to the local OVS instance through OVSDB and OpenFlow, and uses the southbound DB to learn the locations of remote compute nodes (their tunnel endpoints, or VTEPs). All of the controllers are coordinated through the southbound database.
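The division of labour between ovn-northd and ovn-controller can be illustrated with a toy model in Python. It assumes a single kind of flow (destination MAC lookup) and represents physical flows as plain tuples rather than real OpenFlow; the table name is only loosely modeled on OVN's stage naming, and the MAC addresses, IP addresses and OpenFlow port numbers are hypothetical. The point it shows is that ovn-northd produces one chassis-independent set of logical flows, and each ovn-controller specialises them using southbound binding and tunnel-endpoint data.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LogicalFlow:
    datapath: str   # logical switch the flow belongs to
    table: str      # pipeline stage name (loosely modeled on OVN's naming)
    priority: int
    match: str
    outport: str    # logical port the flow forwards to (simplified action)


def northd(nb: Dict[str, Dict[str, str]]) -> List[LogicalFlow]:
    """Toy ovn-northd: one L2 lookup flow per logical switch port.

    nb maps logical switch name -> {logical port name: MAC address}.
    """
    return [
        LogicalFlow(switch, "ls_in_l2_lkup", 50, f"eth.dst == {mac}", port)
        for switch, ports in nb.items()
        for port, mac in ports.items()
    ]


def ovn_controller(chassis: str,
                   flows: List[LogicalFlow],
                   port_bindings: Dict[str, str],
                   local_ofports: Dict[str, int],
                   encap_ips: Dict[str, str]) -> List[Tuple[int, str, str]]:
    """Toy ovn-controller: turn the shared logical flows into local 'physical' flows.

    port_bindings: logical port -> chassis it is bound to (southbound state)
    local_ofports: logical port -> OpenFlow port number on this chassis
    encap_ips:     chassis -> tunnel endpoint IP (southbound state)
    """
    physical = []
    for flow in flows:
        owner = port_bindings.get(flow.outport)
        if owner is None:
            continue  # port not bound to any chassis yet: nothing to install
        if owner == chassis:
            action = f"output:{local_ofports[flow.outport]}"
        else:
            action = f"tunnel to {encap_ips[owner]}"
        physical.append((flow.priority, flow.match, action))
    return physical


nb = {"ls1": {"vm1": "fa:16:3e:00:00:01", "vm2": "fa:16:3e:00:00:02"}}
flows = northd(nb)
bindings = {"vm1": "hv1", "vm2": "hv2"}
encaps = {"hv1": "192.0.2.11", "hv2": "192.0.2.12"}

for physical_flow in ovn_controller("hv1", flows, bindings, {"vm1": 5}, encaps):
    print(physical_flow)
```

On hv1, the flow for vm1 becomes a local output while the flow for vm2 becomes a tunnel towards hv2; running the same function for hv2 produces the mirror image from exactly the same logical flows.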

More details on OVN components can be found at this link.

OVN uses databases for its control plane and benefits from their scalability. It uses ovsdb-server as its database, which introduces no new dependencies because ovsdb-server is already present on every host where OVS is used.

Each instance of ovn-controller always works with a consistent snapshot of the database, over an update-driven connection. If connectivity is interrupted, ovn-controller catches back up to the latest consistent snapshot of the relevant database contents and processes them.
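The catch-up behaviour can be sketched with a toy database that numbers each atomic transaction and keeps a short history, loosely in the spirit of OVSDB's `monitor_cond_since` request. Everything here (the class names, the history limit, the key/value rows) is hypothetical: a reconnecting client receives either the merged changes since the last transaction it saw or, if the history no longer reaches back that far, a full snapshot. Either way it never observes half a transaction.

```python
from typing import Dict, List, Optional, Tuple

Changes = Dict[str, Optional[str]]  # key -> new value, or None for a deletion


class ToyDb:
    """Each transaction is applied atomically and numbered; only recent ones are kept."""

    def __init__(self, history_limit: int = 3) -> None:
        self.rows: Dict[str, str] = {}
        self.txn_id = 0
        self.history: List[Tuple[int, Changes]] = []
        self.history_limit = history_limit

    def commit(self, changes: Changes) -> None:
        for key, value in changes.items():
            if value is None:
                self.rows.pop(key, None)
            else:
                self.rows[key] = value
        self.txn_id += 1
        self.history = (self.history + [(self.txn_id, dict(changes))])[-self.history_limit:]

    def updates_since(self, last_seen: int) -> Tuple[str, Dict]:
        """Return ('delta', changes) if history covers last_seen, else ('snapshot', rows)."""
        oldest = self.history[0][0] if self.history else self.txn_id + 1
        if last_seen >= oldest - 1:
            delta: Changes = {}
            for txn, changes in self.history:
                if txn > last_seen:
                    delta.update(changes)
            return "delta", delta
        return "snapshot", dict(self.rows)


class ToyController:
    """Keeps a local copy of the rows and catches up after a disconnection."""

    def __init__(self) -> None:
        self.view: Dict[str, str] = {}
        self.last_seen = 0

    def sync(self, db: ToyDb) -> str:
        kind, payload = db.updates_since(self.last_seen)
        if kind == "snapshot":
            self.view = dict(payload)
        else:
            for key, value in payload.items():
                if value is None:
                    self.view.pop(key, None)
                else:
                    self.view[key] = value
        self.last_seen = db.txn_id
        return kind


db = ToyDb(history_limit=2)
ctl = ToyController()
db.commit({"lsp1": "hv1"})
print(ctl.sync(db), ctl.view)  # delta {'lsp1': 'hv1'}
# The controller is disconnected while three more transactions commit.
db.commit({"lsp2": "hv2"})
db.commit({"lsp1": None})
db.commit({"lsp3": "hv1"})
print(ctl.sync(db), ctl.view)  # snapshot {'lsp2': 'hv2', 'lsp3': 'hv1'}
```

The snapshot fallback trades bandwidth for simplicity: the server only needs to keep a bounded history, and a client that falls too far behind still ends up with a consistent view.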

Logical flows

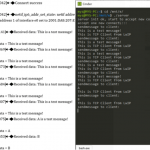

Here is how north-south DB flow population works:

OVN introduces an intermediate representation of the system's configuration, called logical flows. Logical network configurations are stored in the Northbound DB (for example, as defined and written by the CMS, such as the Neutron ML2 plugin). Logical flows have an expressiveness similar to physical OpenFlow flows, but they operate only on logical entities. The logical flows for a given network are identical across the whole environment.

Ovn-northd translates these logical network topology elements into logical flow tables in the southbound DB. Together with the other southbound tables, these are delivered to the OVN controllers on the compute nodes through OVSDB monitor requests. As a result, changes in the Southbound DB are pushed down only to the OVN controllers for which they are relevant (this is called ‘conditional monitoring’), and each chassis hypervisor generates its physical flows accordingly. On the hypervisor, ovn-controller receives the updated Southbound DB data, updates the physical flows of OVS, and updates the running configuration version ID.
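Logical flow tables are traversed in order, much like an OpenFlow pipeline but expressed over logical ports and switches. The Python below is a toy interpreter for that idea, assuming just two stages (port security, then destination lookup), Python lambdas instead of OVN's match and action syntax, a "drop on table miss" rule, and hypothetical port names and MAC addresses. The real OVN pipeline has many more stages. Nothing in the sketch depends on which hypervisor runs it, which is why identical logical flows can be shipped to every chassis.

```python
from typing import Callable, Dict, List, Tuple

Packet = Dict[str, str]
# (priority, match, action); an action returns "next", "drop" or "output"
Flow = Tuple[int, Callable[[Packet], bool], Callable[[Packet], str]]

VM1_MAC = "fa:16:3e:00:00:01"
VM2_MAC = "fa:16:3e:00:00:02"


def run_pipeline(tables: List[List[Flow]], pkt: Packet) -> str:
    """Walk the logical flow tables in order, applying the best match in each."""
    for table in tables:
        hits = [flow for flow in table if flow[1](pkt)]
        if not hits:
            return "drop"  # assumption of this sketch: a table miss drops
        _, _, action = max(hits, key=lambda flow: flow[0])
        verdict = action(pkt)
        if verdict != "next":
            return verdict
    return "drop"


def set_outport(port: str) -> Callable[[Packet], str]:
    """Build an action that picks the logical output port and outputs."""
    def action(pkt: Packet) -> str:
        pkt["outport"] = port
        return "output"
    return action


# Stage 1: port security - vm1 may only send with its own source MAC.
port_security: List[Flow] = [
    (50, lambda p: p["inport"] == "vm1" and p["eth.src"] == VM1_MAC, lambda p: "next"),
    (0, lambda p: True, lambda p: "drop"),
]
# Stage 2: L2 lookup - choose the logical output port from the destination MAC.
l2_lookup: List[Flow] = [
    (50, lambda p: p["eth.dst"] == VM2_MAC, set_outport("vm2")),
    (50, lambda p: p["eth.dst"] == VM1_MAC, set_outport("vm1")),
]
pipeline = [port_security, l2_lookup]

pkt = {"inport": "vm1", "eth.src": VM1_MAC, "eth.dst": VM2_MAC}
print(run_pipeline(pipeline, pkt), pkt["outport"])  # output vm2
spoofed = {"inport": "vm1", "eth.src": "fa:16:3e:ff:ff:ff", "eth.dst": VM2_MAC}
print(run_pipeline(pipeline, spoofed))  # drop
```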

At the hypervisor level, when a new VM is added to a compute node, the OVN controller on that node sends (pushes up) a new monitoring condition to the Southbound DB via the OVSDB protocol, so that it starts receiving the updates relevant to the new VM. This keeps irrelevant updates from being sent to the node.
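A minimal sketch of conditional monitoring, assuming that a chassis' condition is simply the set of logical switches (datapaths) its local ports belong to. The real ovn-controller's notion of relevant datapaths is broader (for example, it also follows logical routers connected to local switches), and the port, switch and row names below are hypothetical.

```python
from typing import Dict, Iterable, List, Set

Update = Dict[str, str]  # one southbound row change; only "datapath" is used here


def monitor_condition(local_ports: Iterable[str], port_datapath: Dict[str, str]) -> Set[str]:
    """Chassis side: the datapaths (logical switches) this chassis needs to hear about."""
    return {port_datapath[port] for port in local_ports}


def filter_updates(updates: List[Update], condition: Set[str]) -> List[Update]:
    """Server side: forward only the rows that satisfy the chassis' condition."""
    return [u for u in updates if u["datapath"] in condition]


port_datapath = {"vm1": "ls1", "vm2": "ls2", "vm3": "ls3"}
updates = [
    {"datapath": "ls1", "row": "lflow-a"},
    {"datapath": "ls2", "row": "lflow-b"},
    {"datapath": "ls3", "row": "lflow-c"},
]

local_ports = ["vm1"]
print([u["row"] for u in filter_updates(updates, monitor_condition(local_ports, port_datapath))])
# A new VM (vm2) lands on this compute node: the controller widens its condition.
local_ports.append("vm2")
print([u["row"] for u in filter_updates(updates, monitor_condition(local_ports, port_datapath))])
```

The first call forwards only `lflow-a`; after vm2 is added, `lflow-b` is forwarded too, while `lflow-c` for ls3 never reaches this node.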

Ovn-northd monitors nb_cfg globally and per chassis: nb_cfg is the currently requested configuration version, sb_cfg is the version reflected in the southbound flow configuration, and hv_cfg is the version the chassis are running.
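How these counters advance can be sketched in a few lines of Python, loosely following the way the OVN northbound schema describes the NB_Global columns and simplified for illustration. The function names are hypothetical, and leaving hv_cfg unchanged when no chassis reports is an assumption of this sketch. The key property is that hv_cfg is the minimum of what the chassis report, so it only advances once the slowest chassis has caught up.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class NbGlobal:
    nb_cfg: int = 0  # bumped by the CMS for each configuration request
    sb_cfg: int = 0  # version ovn-northd has written into the southbound DB
    hv_cfg: int = 0  # version every chassis has caught up to


def cms_request(nb: NbGlobal) -> int:
    """CMS side: bump nb_cfg and remember which version to wait for."""
    nb.nb_cfg += 1
    return nb.nb_cfg


def northd_sync(nb: NbGlobal, sb_written: bool, chassis_cfg: Dict[str, int]) -> None:
    """ovn-northd side: propagate progress back into the northbound counters.

    chassis_cfg maps each chassis to the nb_cfg it reports having applied.
    """
    if sb_written:
        nb.sb_cfg = nb.nb_cfg
    if chassis_cfg:  # assumption: with no chassis reporting, hv_cfg is left as-is
        nb.hv_cfg = min(chassis_cfg.values())


nb = NbGlobal()
seq = cms_request(nb)
northd_sync(nb, sb_written=True, chassis_cfg={"hv1": 1, "hv2": 0})
print(nb)                # NbGlobal(nb_cfg=1, sb_cfg=1, hv_cfg=0)
northd_sync(nb, sb_written=True, chassis_cfg={"hv1": 1, "hv2": 1})
print(nb.hv_cfg >= seq)  # True
```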

Status flows

Status population goes from south to north and works in the following way:

Ovn-northd populates the UP column in the northbound DB for each virtual port (v-port), so that OVN gives the CMS feedback on the realization of the requested configuration. Ovn-northd monitors nb_cfg globally and per chassis, and the CMS compares the nb_cfg, sb_cfg, and hv_cfg versions to track how far a configuration request has been implemented.
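From the CMS point of view, the feedback loop can be sketched as two small checks: is the port up, and how far has a given configuration version travelled? This is a simplified model with hypothetical function names; real ovn-northd may consider more than the chassis binding before marking a port up. The progress check is similar in spirit to what the `--wait=sb` and `--wait=hv` options of `ovn-nbctl` wait for.

```python
from typing import Dict, Optional


def port_up_status(port_bindings: Dict[str, Optional[str]]) -> Dict[str, bool]:
    """ovn-northd side (simplified): a logical port is 'up' once it is bound to a chassis."""
    return {port: chassis is not None for port, chassis in port_bindings.items()}


def request_progress(seqno: int, sb_cfg: int, hv_cfg: int) -> str:
    """CMS side: classify how far configuration request `seqno` has progressed."""
    if sb_cfg < seqno:
        return "pending in ovn-northd"
    if hv_cfg < seqno:
        return "pending on hypervisors"
    return "realized"


print(port_up_status({"vm1": "hv1", "vm2": None}))     # {'vm1': True, 'vm2': False}
print(request_progress(seqno=3, sb_cfg=3, hv_cfg=2))  # pending on hypervisors
```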

Migrating from OVS to Charmed OVN

One of the major enhancements Canonical has delivered since the Charmed OpenStack 20.10 release is a procedure for migrating existing OVS-based deployments to OVN. This allows Charmed OpenStack users to easily migrate from OVS to OVN and enjoy its benefits, such as native support for virtual network abstractions and better control plane separation. They thus obtain a fully functional software-defined networking platform in their Charmed OpenStack environment.

The entire migration process is automated and encapsulated in open source operators (Charms) that provide a high-level abstraction of infrastructure operations. DevOps teams only need to adjust their Maximum Transmission Unit (MTU) size to accommodate the Geneve encapsulation used by OVN, deploy the OVN components and the Vault service, and initiate the migration. Once the migration is complete, users can safely remove redundant components such as the Neutron OVS and Neutron Gateway agents from the Juju model.
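The MTU adjustment follows from Geneve's per-packet overhead. The arithmetic below is a sketch assuming an IPv4 underlay without VLAN tags and a single 32-bit OVN Geneve option; an IPv6 underlay would add another 20 bytes, and the values a given Charmed OpenStack deployment actually configures may differ.

```python
# Bytes added per packet by OVN's Geneve encapsulation, assuming an IPv4 underlay,
# no VLAN tags, and a single 32-bit OVN Geneve option.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
GENEVE_BASE_HEADER = 8
OVN_GENEVE_OPTION = 8       # 4-byte option header + 4 bytes of logical port data
INNER_ETHERNET_HEADER = 14  # the tenant's Ethernet header travels inside the tunnel

GENEVE_OVERHEAD = (OUTER_IPV4_HEADER + OUTER_UDP_HEADER + GENEVE_BASE_HEADER
                   + OVN_GENEVE_OPTION + INNER_ETHERNET_HEADER)


def max_tenant_mtu(underlay_mtu: int) -> int:
    """Largest tenant (inner IP) MTU that fits in one underlay packet."""
    return underlay_mtu - GENEVE_OVERHEAD


def min_underlay_mtu(tenant_mtu: int) -> int:
    """Smallest underlay MTU that carries tenant_mtu without fragmentation."""
    return tenant_mtu + GENEVE_OVERHEAD


print(GENEVE_OVERHEAD)         # 58
print(max_tenant_mtu(1500))    # 1442
print(min_underlay_mtu(1500))  # 1558
```

With a standard 1500-byte underlay, tenant networks therefore get at most 1442 bytes; alternatively, raising the underlay MTU (for example with jumbo frames) to at least 1558 bytes preserves a 1500-byte tenant MTU.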

Canonical has also enabled OVN on SmartNICs and DPUs.

More details about Charmed OpenStack and Charmed OVN can be found at this link.

What is next?

The next blog will be dedicated to SmartNICs, one of the most interesting emerging topics, which will shape the modern data centre landscape.
