Designing a production service environment around Apache Kafka that delivers low latency and zero data loss at scale is non-trivial. Indeed, it’s the holy grail of messaging systems. In this blog post, I’ll outline some of the fundamental service design considerations that you’ll need to take into account for your service architecture to measure up.

Let’s start with the basics.

Foundation services for Apache Kafka solutions

Foundation services are things like Network Time Protocol (NTP) services and the Domain Name System (DNS), as well as network routing, firewalling and zoning. You’ll need to get these right before even considering moving on to the application deployment itself. Now, I know what you’re saying: “I’m deploying Kafka on the cloud, this stuff is all taken care of, nothing to see here”. Well, not quite.

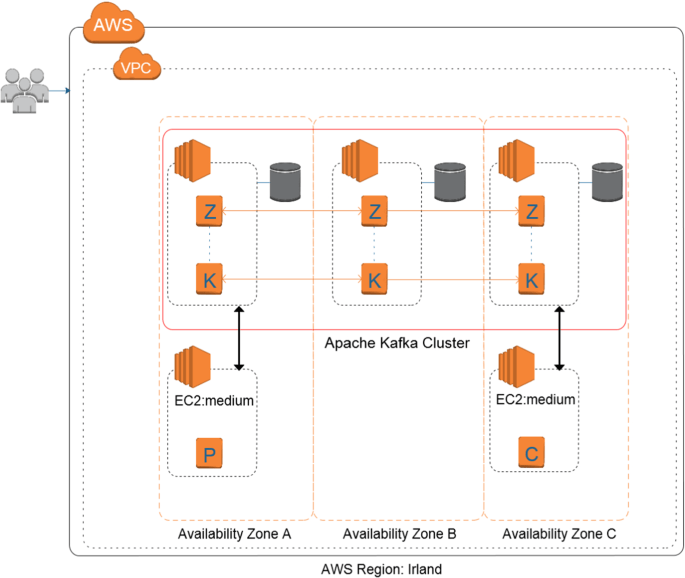

Even on the cloud, you’ll need to ensure that your VPC environment has network routing configured correctly, that firewall ingress and egress rules are appropriate and functional, and that your network zoning design enables the communications flows that your Kafka-based applications will expect. For instance, client producers and consumers typically need to be able to access all Kafka brokers in the cluster. You may also need to ensure that your cloud’s DNS service is appropriately configured, if you’re using such a service.
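As a rough illustration of the broker reachability point above, here is a minimal sketch that tries to open a TCP connection to every broker from a client host. The function name `unreachable_brokers` is hypothetical, and the list you pass in is an assumption about your environment: it should contain the addresses your brokers advertise to clients. A successful connect only shows that DNS, routing and firewall rules allow the flow; it does not validate TLS or authentication.

```python
import socket


def unreachable_brokers(brokers, timeout=3.0):
    """Return the (host, port) pairs that could not be reached over TCP."""
    failed = []
    for host, port in brokers:
        try:
            # create_connection resolves the name and opens a TCP connection,
            # so one call exercises DNS, routing and firewall rules together.
            with socket.create_connection((host, port), timeout=timeout):
                pass
        except OSError:
            # Covers DNS resolution failures, refused connections and timeouts.
            failed.append((host, port))
    return failed
```

You could run something like `unreachable_brokers([("kafka-0.example.internal", 9092)])` from each network zone that hosts producers or consumers; the hostname here is a placeholder, and an empty result means every listed broker accepted the connection.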

For on-premises deployments, you’ll most certainly also need to take care of NTP services. Close time synchronisation is important for distributed, clustered applications like Apache Kafka. When using virtualisation and private cloud solutions like Charmed OpenStack, it’s essential to ensure that guest VM time is synchronised to a reliable and stable time source, ideally one external to the hypervisor cluster. Otherwise clocks can drift significantly between machines, and in some cases guest VM time might even jump forwards and backwards, causing nothing short of total chaos for your Apache Kafka deployment.
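To make “close time synchronisation” a little more concrete, the sketch below applies the standard NTP arithmetic for estimating how far a client clock is from a server clock, using the four timestamps of a single request and response exchange. The function name is hypothetical, and the sketch only does the arithmetic: it does not talk to an NTP server.

```python
def ntp_offset_and_delay(t0, t1, t2, t3):
    """Estimate clock offset and round-trip delay from one NTP exchange.

    t0: client clock when the request was sent
    t1: server clock when the request was received
    t2: server clock when the response was sent
    t3: client clock when the response was received
    All values are in seconds.
    """
    # Positive offset means the server clock is ahead of the client clock.
    offset = ((t1 - t0) + (t2 - t3)) / 2.0
    # Round-trip network delay, excluding the server's processing time.
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay
```

For example, `ntp_offset_and_delay(10.0, 10.6, 10.7, 10.3)` describes a client that is about 0.5 seconds behind the server with a round trip of about 0.2 seconds. In practice you would rely on your NTP daemon’s own reporting rather than computing this yourself.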

When you have worked out the foundation services plan for your deployment, the next step will be to plan the application deployment architecture for your Kafka solution.

Kafka application deployment architecture

Kafka can be deployed in a stretch cluster pattern for high resilience scenarios, whereby nodes of the same cluster are distributed across multiple data centres. The same data centres can of course also host multiple clusters; for example a primary and a backup Kafka cluster can be deployed side by side with replication between the clusters, in order to minimise downtime during service interventions. As always, there are tradeoffs to be considered here. When a Kafka broker node fails, re-replicating the partitions it hosted to other brokers in the cluster can consume considerable cross data centre network capacity. You’ll also need high performance network interconnects between sites, or your Kafka producer applications will suffer from latency during writes.

Alternatively, you might consider an architecture with multiple site-specific Kafka clusters, using asynchronous mirroring between sites. Again, there’s a tradeoff to decide on between availability, integrity and cost.

The diagram below illustrates a stretch cluster deployed across three sites. Site “A” and site “B” host Kafka brokers and Apache ZooKeeper, whilst site “C” only hosts ZooKeeper and acts as an arbiter to prevent “split brain” occurrences. A “split brain” situation occurs when a network partition event separates site A from site B, causing each site to assume the loss of the other, to continue serving (potentially stale) data, and to process data in a way that can cause irreconcilable data conflicts once network connectivity is reestablished.
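The arbiter’s role can be sketched with a simple majority calculation. ZooKeeper only keeps serving while a strict majority of the ensemble can communicate, so after a partition at most one group of sites can carry on. The per-site ZooKeeper node counts below are assumptions for illustration, as is the function name.

```python
def group_has_quorum(layout, connected_sites):
    """Return True if the connected sites hold a strict majority of voters.

    layout: mapping of site name to number of ZooKeeper nodes at that site
    connected_sites: the sites that can still reach each other
    """
    total = sum(layout.values())
    reachable = sum(layout[site] for site in connected_sites)
    return reachable > total // 2


# Assumed layout: one ZooKeeper node at each of the three sites.
three_sites = {"A": 1, "B": 1, "C": 1}
# Assumed layout without an arbiter site.
two_sites = {"A": 1, "B": 1}
```

With the three-site layout, if the link between A and B fails while C can still reach A, `group_has_quorum(three_sites, {"A", "C"})` is true and `group_has_quorum(three_sites, {"B"})` is false, so only one side continues. With the two-site layout neither side alone holds a majority, which is why the third site is worth having.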

An important consideration with this type of deployment is network capacity between the sites. The type of site-to-site links in play, the total capacity of the links, as well as the committed capacity that will be available to the Kafka service for intra-cluster replication traffic all need to be carefully assessed.
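As a back-of-the-envelope aid for that assessment, the sketch below estimates steady-state replication traffic over a site-to-site link. It assumes that every remote follower replica fetches its own copy of the data from the partition leader, and it ignores producer and consumer traffic that may also cross the link, as well as the extra load of rebuilding replicas after a failure. The function names and the example figures are hypothetical.

```python
def cross_site_replication_mbit_s(produce_mb_s, remote_replicas):
    """Estimate replication traffic crossing the link, in Mbit/s.

    produce_mb_s: aggregate producer write rate in megabytes per second
    remote_replicas: follower replicas per partition hosted at the other site
    """
    # Each remote follower pulls a full copy of the written data;
    # multiply by 8 to convert megabytes to megabits.
    return produce_mb_s * remote_replicas * 8


def link_utilisation(produce_mb_s, remote_replicas, committed_mbit_s):
    """Fraction of the committed link capacity used by replication."""
    traffic = cross_site_replication_mbit_s(produce_mb_s, remote_replicas)
    return traffic / committed_mbit_s
```

For instance, a write rate of 50 MB/s with two remote replicas comes to 800 Mbit/s, or about 8% of a link with 10,000 Mbit/s committed to Kafka. Treat the result as a starting point for measurement, not as a capacity plan.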

Ubuntu Server host design

The overall performance of a Kafka solution is influenced to a considerable extent by the layout of the host servers. Choice of operating system, memory capacity, storage class, volumes and file system can all affect the performance of the solution to varying degrees.

For instance, the performance of consumer applications processing data from Kafka Brokers where Transport Layer Security (TLS) is enabled can benefit greatly from deployment on a Linux-based platform like Ubuntu Server 22.04 LTS, which is distributed with a maintained and stable OpenSSL implementation.

Selecting a high performance filesystem, such as XFS, for the volumes hosting Kafka log data brings the stability and significant performance advantages of this mature filesystem to your Kafka deployment.

To ensure the stability and performance of individual Kafka broker nodes within the cluster, you need to configure the systems with sufficient memory to host both the Kafka broker process (which typically needs around 6GB of allocated memory for the JVM heap) and the operating system, which needs enough memory to build an effective pagecache containing Kafka’s log data.
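One rough way to reason about the memory split is to add the JVM heap to a pagecache budget sized to hold a short window of recent writes. The sketch below uses the roughly 6GB heap figure mentioned above; the 30 second buffering window is an assumed rule of thumb rather than a requirement, and the function name is hypothetical.

```python
def broker_memory_gb(write_mb_s, buffer_seconds=30, heap_gb=6.0):
    """Estimate memory per broker in GB (decimal) as heap plus pagecache.

    write_mb_s: write throughput handled by this broker, in MB/s
    buffer_seconds: assumed window of recent writes to keep in pagecache
    heap_gb: JVM heap allocated to the Kafka broker process
    """
    # Pagecache needed to hold the most recent writes, converted MB to GB.
    pagecache_gb = write_mb_s * buffer_seconds / 1000.0
    return heap_gb + pagecache_gb
```

Under these assumptions, a broker taking 100 MB/s of writes would want around 9 GB before allowing for the operating system itself and any other processes on the host. Consumers that read far behind the head of the log will need more pagecache than this estimate suggests.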

Other aspects you’ll need to consider when designing your Ubuntu Server hosts for Kafka, while not strictly a concern for low latency deployment, include system hardening, for example ensuring that all unnecessary privileges are revoked. You’ll also need to tune your OpenJDK JRE (Java Runtime Environment) installation, selecting an appropriate garbage collector and configuring it for your workload.

Detailed guidance on dimensioning the cluster to ensure that enough Kafka broker nodes are deployed to meet load, latency and availability objectives is outside the scope of this blog post, but this is an exercise you will need to complete to ensure your Kafka service is right-sized for your use case. Even in an elastic cloud scenario, where capacity extensions can be highly automated and take only a few minutes, you will still need to have a clear understanding of the total cost of the running solution, so this step really mustn’t be skipped.

Deployment observability

There will be times when you need to troubleshoot, both during the initial proof of concept phase and in the running production service. For this you will need sufficient monitoring and diagnostics integration. Typically this means enabling metrics export on the JVMs so they can be collected and processed using tools like Telegraf and Prometheus, deploying log aggregation solutions like syslog-ng, and potentially even using tcpdump for network traffic inspection.

With an observability solution like the Canonical Observability Stack (COS Lite) this turns into a low effort endeavour where the only step needed is to relate Kafka to a deployed telemetry collector, like COS Proxy for machine deployments, or Grafana Agent for Kubernetes.

Production Kafka service design wrap-up

Designing a production Kafka service for low-latency, high-volume, zero data loss use cases is no mean feat, but by taking a holistic, systematic approach to service design, you can build a robust service that offers minimal downtime whilst delivering low latency at scale.

Canonical has extensive expertise in deploying Apache Kafka and offers fully managed services for Apache Kafka, both on-premises and in the cloud, designed and tailored to your unique needs.

Get in touch with us and let us know your requirements.

Learn more about Canonical solutions for Apache Kafka.
